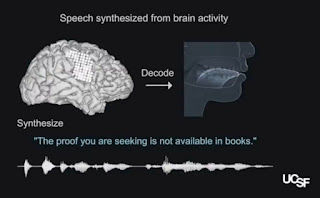

Speech Synthesis from the Brain: researchers at the University of California, San Francisco (UCSF) have introduced a new technology that turns the brain signals behind speech into audible synthetic speech, with the aim of giving a voice to people who cannot speak aloud.

Speech Synthesis from the Brain

Scientists have harnessed artificial intelligence to translate brain signals into speech, a step toward brain implants that could one day let people with impaired speech speak their minds, according to a new study.

In findings published Wednesday in the journal Nature, a research team at the University of California, San Francisco, introduced an experimental brain decoder that combined direct recording of signals from the brains of research subjects with artificial intelligence, machine learning and a speech synthesizer.

When perfected, the system could give people who cannot speak, such as stroke patients, cancer patients, and people with amyotrophic lateral sclerosis (ALS, or Lou Gehrig's disease), the ability to hold conversations at a natural pace, the researchers said.

Virtual Vocal Tract Improves Naturalistic Speech Synthesis

The research was led by Gopala Anumanchipalli, PhD, a speech scientist, and Josh Chartier, a bioengineering graduate student, both in the lab of UCSF neurosurgeon Edward Chang. It builds on a recent study in which the pair described for the first time how the human brain's speech centers choreograph the movements of the lips, jaw, tongue, and other vocal tract components to produce fluent speech.

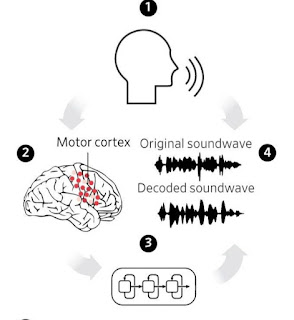

From that work, Anumanchipalli and Chartier realized that previous attempts to directly decode speech from brain activity might have met with limited success because these brain regions do not directly represent the acoustic properties of speech sounds, but rather the instructions needed to coordinate the movements of the mouth and throat during speech.

"The relationship between the movements of the vocal tract and the speech sounds that are produced is a complicated one," Anumanchipalli said. "We reasoned that if these speech centers in the brain are encoding movements rather than sounds, we should try to do the same in decoding those signals."

In their new study, Anumanchipalli and Chartier asked five volunteers being treated at the UCSF Epilepsy Center to read several hundred sentences aloud while the researchers recorded activity from a brain region known to be involved in language production. The volunteers were patients with intact speech who had electrodes temporarily implanted in their brains to map the source of their seizures in preparation for neurosurgery.

Based on the audio recordings of participants' voices, the researchers used linguistic principles to reverse engineer the vocal tract movements needed to produce those sounds: pressing the lips together here, tightening vocal cords there, shifting the tip of the tongue to the roof of the mouth, then relaxing it, and so on.

This detailed mapping of sound to anatomy allowed the scientists to create a realistic virtual vocal tract for each participant, controlled by that participant's brain activity. The system comprised two "neural network" machine learning algorithms: a decoder that transforms brain activity patterns produced during speech into movements of the virtual vocal tract, and a synthesizer that converts these vocal tract movements into a synthetic approximation of the participant's voice.
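To make the two-stage design concrete, here is a minimal Python sketch of the data flow only. It assumes hypothetical fixed linear maps (`w_dec`, `w_syn`) in place of the trained neural networks the UCSF team actually used, and toy feature sizes; all function names are illustrative, not taken from the study. What it shows is that the decoder never predicts sound directly: it first predicts vocal-tract positions, and only the second stage turns those positions into acoustic features.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix by a vector: one output value per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def decode_articulation(neural_frames: List[Vector], w_dec: Matrix) -> List[Vector]:
    """Stage 1 (hypothetical linear stand-in for the decoder network):
    map each frame of recorded brain activity to virtual vocal-tract positions."""
    return [matvec(w_dec, frame) for frame in neural_frames]


def synthesize_acoustics(articulation: List[Vector], w_syn: Matrix) -> List[Vector]:
    """Stage 2 (hypothetical linear stand-in for the synthesizer network):
    map each frame of vocal-tract positions to acoustic features."""
    return [matvec(w_syn, frame) for frame in articulation]


def brain_to_speech(neural_frames: List[Vector], w_dec: Matrix, w_syn: Matrix) -> List[Vector]:
    """Chain the stages: brain activity -> movements -> sound features."""
    return synthesize_acoustics(decode_articulation(neural_frames, w_dec), w_syn)


if __name__ == "__main__":
    # Toy sizes (assumed): 3 electrode channels, 2 articulator values
    # (e.g. lip aperture, tongue-tip height), 2 acoustic features.
    w_dec = [[1.0, 0.0, 0.5], [0.0, 2.0, 0.0]]
    w_syn = [[1.0, 1.0], [0.5, -1.0]]
    frames = [[1.0, 1.0, 2.0], [0.0, 0.5, 0.0]]
    print("movements:", decode_articulation(frames, w_dec))
    print("acoustics:", brain_to_speech(frames, w_dec, w_syn))
```

Keeping the movement stage as an explicit intermediate step mirrors the researchers' reasoning: if the speech centers encode movements rather than sounds, the decoder should target movements too.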

The synthetic speech produced by these algorithms was significantly better than synthetic speech directly decoded from participants' brain activity without the inclusion of simulations of the speakers' vocal tracts, the researchers found. The algorithms produced sentences that were understandable to hundreds of human listeners in crowdsourced transcription tests conducted on the Amazon Mechanical Turk platform.

As is the case with natural speech, the transcribers were more successful when they were given shorter lists of words to choose from, as would be the case with caregivers who are primed for the kinds of phrases or requests patients might utter. The transcribers accurately identified 69 percent of synthesized words from lists of 25 alternatives and transcribed 43 percent of sentences with perfect accuracy. With a more challenging 50 words to choose from, transcribers' overall accuracy dropped to 47 percent, though they were still able to understand 21 percent of synthesized sentences perfectly.
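The listening-test numbers are easier to read with a quick calculation. The Python sketch below assumes a simple, hypothetical scoring rule (a reference word counts as correct when the transcript has the same word in the same position, and a sentence counts as perfect only when every word matches) rather than the study's exact protocol. It also computes the chance rate for guessing a word from a list of N alternatives, which is 1/N: 4 percent for 25 words and 2 percent for 50, far below the 69 and 47 percent the transcribers achieved.

```python
from typing import List, Tuple


def score_transcriptions(pairs: List[Tuple[str, str]]) -> Tuple[float, float]:
    """Return (word_accuracy, perfect_sentence_rate) for (reference, transcript) pairs.

    Hypothetical scoring rule: a reference word is correct when the transcript
    has the same word at the same position; a sentence is perfect only when
    the transcript matches the reference word for word.
    """
    correct_words = 0
    total_words = 0
    perfect_sentences = 0
    for reference, transcript in pairs:
        ref_words = reference.lower().split()
        hyp_words = transcript.lower().split()
        # zip stops at the shorter list, so missing words simply earn no credit
        correct_words += sum(r == h for r, h in zip(ref_words, hyp_words))
        total_words += len(ref_words)
        if ref_words == hyp_words:
            perfect_sentences += 1
    return correct_words / total_words, perfect_sentences / len(pairs)


def chance_accuracy(list_size: int) -> float:
    """Chance of picking the right word by guessing uniformly from the list."""
    return 1 / list_size


if __name__ == "__main__":
    trials = [
        ("the cat sat", "the cat sat"),  # every word right: a perfect sentence
        ("a dog ran", "a log ran"),      # one wrong word out of three
    ]
    word_acc, perfect_rate = score_transcriptions(trials)
    print(f"word accuracy {word_acc:.0%}, perfect sentences {perfect_rate:.0%}")
    print(f"chance with 25 words: {chance_accuracy(25):.0%}")
    print(f"chance with 50 words: {chance_accuracy(50):.0%}")
```

Position-by-position matching is the simplest rule to show; a more forgiving scorer would align the words first (as word-error-rate metrics do) so that one dropped word does not push every later word out of place.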

"We still have a ways to go to perfectly mimic spoken language," Chartier acknowledged. "We're quite good at synthesizing slower speech sounds like 'sh' and 'z' as well as maintaining the rhythms and intonations of speech and the speaker's gender and identity, but some of the more abrupt sounds like 'b's and 'p's get a bit fuzzy. Still, the levels of accuracy we produced here would be an amazing improvement in real-time communication compared to what's currently available."

Artificial Intelligence, Linguistics, and Neuroscience Fueled Advance

The researchers are currently experimenting with higher-density electrode arrays and more advanced machine learning algorithms.

I hope this article helped you understand the science behind speech synthesis from the brain. If you liked it, please share it, and if you have any suggestions, leave a comment. See you in the next article! 🤗
